Overview

Create GCP compute commitments across an entire project running a fleet of globally distributed resources using my latest bash script. The script is configured to purchase 12-month committed resources based on several required input values: project-id, machine-type, commitment-type, number of vCPUs, and amount of memory. Ideally, I would have implemented this using Terraform, as that is how this environment is managed. While the google_compute_reservation module is available, there was no module for compute commitments at the time of this writing, which is what's required for this scenario. I didn't need to configure compute reservations, so that capability will not be covered here.

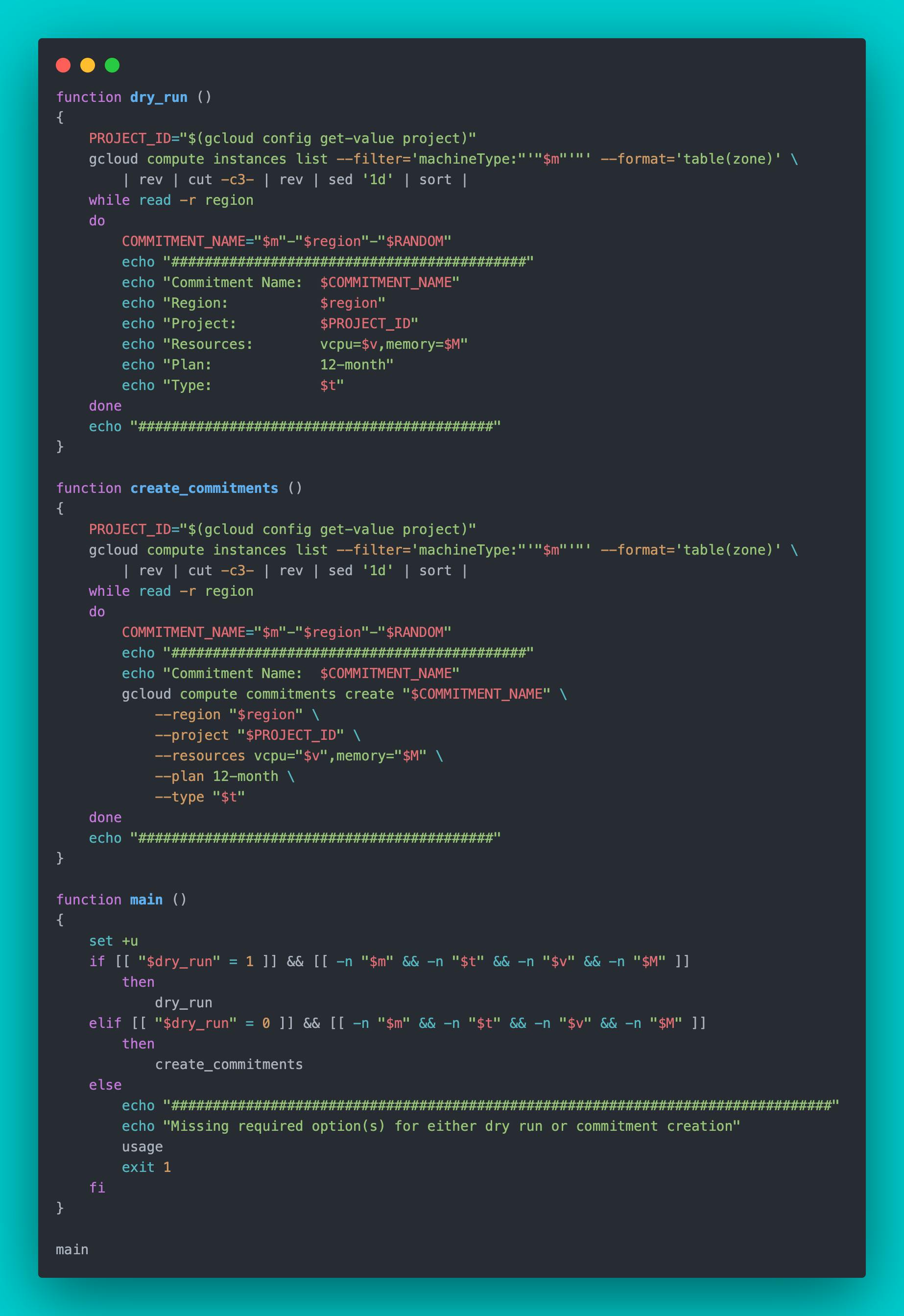

Script Functionality

Link to script: gcp_committed_resources.sh

Based on the machine-type value entered (e.g. e2-standard-2), the script loops through all instances across your project running that machine type and creates a uniquely named commitment for each instance using the following format: <machine-type>-<region>-<random#>, for example: e2-standard-2-us-west4-19289. This prevents duplicate commitment names in regions where you might be running multiple instances of the same machine type. The intent is for each created commitment to represent an individual VM's resources within the region where it resides.
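The naming format above can be sketched in a few lines of Bash. This is a minimal illustration rather than the script's actual code: the function names region_from_zone and commitment_name are hypothetical, and it assumes the region can be derived by dropping the last hyphen-separated part of a zone name.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative, not taken from the script.

# Drop the trailing zone letter: us-west4-b -> us-west4
region_from_zone() {
  local zone="$1"
  printf '%s\n' "${zone%-*}"
}

# Build <machine-type>-<region>-<random#>; $RANDOM expands to an integer in 0..32767.
commitment_name() {
  local machine_type="$1" region="$2"
  printf '%s-%s-%s\n' "$machine_type" "$region" "$RANDOM"
}

region="$(region_from_zone us-west4-b)"
commitment_name e2-standard-2 "$region"   # e.g. e2-standard-2-us-west4-19289
```

Because $RANDOM only spans 0 to 32767, a name collision within a region is unlikely but not impossible, so it is worth glancing over the dry run names before creating anything.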

The script features a dry-run function that lets you see the results of your input values and how they will be applied to the creation of commitments. Examples of potential conditionals against some inputs (e.g. commitment type, vCPUs and memory) can be uncommented and set for your use case. These can prevent allocating too many resources to a particular commitment, whether through a user input error (e.g. a fat finger) or an oversight about what an existing machine type actually uses.
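As a rough idea of what such conditionals could look like, here is a small sketch. The limits, the allowed commitment type, and the validate_inputs function name are all assumptions chosen for illustration; the script's own commented-out checks may differ.

```bash
#!/usr/bin/env bash
# Hypothetical guard rails; the limits and allowed type are assumptions for illustration.
MAX_VCPUS=8
MAX_MEMORY_GB=32
ALLOWED_TYPE="general-purpose-e2"

validate_inputs() {
  local commitment_type="$1" vcpus="$2" memory_gb="$3"
  if [[ "$commitment_type" != "$ALLOWED_TYPE" ]]; then
    echo "Unexpected commitment type: $commitment_type" >&2
    return 1
  fi
  if ! [[ "$vcpus" =~ ^[0-9]+$ && "$memory_gb" =~ ^[0-9]+$ ]]; then
    echo "vcpus and memory must be whole numbers" >&2
    return 1
  fi
  if (( vcpus > MAX_VCPUS )); then
    echo "vcpus=$vcpus exceeds the limit of $MAX_VCPUS" >&2
    return 1
  fi
  if (( memory_gb > MAX_MEMORY_GB )); then
    echo "memory=$memory_gb exceeds the limit of $MAX_MEMORY_GB GB" >&2
    return 1
  fi
}

# A typo such as 22 vCPUs instead of 2 is rejected before anything is purchased.
validate_inputs general-purpose-e2 2 8 && echo "inputs look sane"
```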

The Bash getopts built-in parses the options and enforces an argument for each of these flags whenever a dry run or commitment creation is run: -p project-id, -m machine-type, -t commitment-type, -v vcpus, and -M memory. Running the script with these options performs a dry run, provided the inputs pass any conditionals you've set. To apply the changes and create the commitments, run the same dry run values and append the -G option, which sets dry_run=0 and invokes the create_commitments function.

Additional flags are available to assist with capturing details needed for the dry run and subsequent commitment creation. Here's the output of the usage function, invoked with the -h flag (the --help flag or running with no options produces the same output):
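The flag handling described above might look roughly like the following. This is a minimal getopts sketch under my own assumptions: the parse_args wrapper and the variable names are hypothetical, and only the dry_run=0 behavior of -G comes from the description.

```bash
#!/usr/bin/env bash
# Minimal getopts sketch; function and variable names are assumptions.
parse_args() {
  local OPTIND=1 opt
  dry_run=1   # default: only print what would be created
  project_id="" machine_type="" commitment_type="" vcpus="" memory=""
  while getopts ":p:m:t:v:M:G" opt; do
    case "$opt" in
      p) project_id="$OPTARG" ;;
      m) machine_type="$OPTARG" ;;
      t) commitment_type="$OPTARG" ;;
      v) vcpus="$OPTARG" ;;
      M) memory="$OPTARG" ;;
      G) dry_run=0 ;;
      :) echo "Option -$OPTARG requires an argument" >&2; return 1 ;;
      \?) echo "Unknown option -$OPTARG" >&2; return 1 ;;
    esac
  done
  # All five values are required for both a dry run and a creation run.
  [[ -n "$project_id" && -n "$machine_type" && -n "$commitment_type" \
     && -n "$vcpus" && -n "$memory" ]]
}

parse_args -p my-project -m e2-standard-2 -t general-purpose-e2 -v 2 -M 8
echo "dry_run=$dry_run"   # prints dry_run=1
```

Defaulting dry_run to 1 means that forgetting a flag can never trigger a purchase; only an explicit -G does.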

./gcp_committed_resources.sh -h

################################################################################

Option descriptions:

-p Sets project-id

-m Sets machine type of current running instances for commitments. Double check as gcloud filter is not exact match!

-t Sets Commitment Type, for available options run -T

-v Sets the amount of vcpu's for each committed resource per zone

-M Sets the amount of Memory for each committed resource per zone, default is GB if not specified

-G Caution! Creates the commitments. Always perform a dry run first without this option!

-P Lists all existing project-id's to select from

-L Lists all instances in set project and includes machine type, vm name & zone

-T Lists possible commitment type options, for details see: https://cloud.google.com/compute/docs/instances/signing-up-committed-use-discounts#commitment_types

-C Lists current compute commitments

Usage: [-p project-id] [-m machine-type] [-t commitment-type] [-v vcpus] [-M memory] [-G create-commitments]

Dry run ex.: ./gcp_committed_resources.sh -p my-project -m e2-standard-2 -t general-purpose-e2 -v 2 -M 8

Creation ex.: ./gcp_committed_resources.sh -p my-project -m e2-standard-2 -t general-purpose-e2 -v 2 -M 8 -G

NOTE: Check Quotas on Commitments against your regions w/ Limit: 0 vcpu's and submit requests for increases here or you'll encounter gcloud crashed (TypeError)

I've successfully run this script from one of the GCP projects in my environment, purchasing commitments for 40x globally distributed compute instances running two different machine types. Below are the details included in the pre-work analysis steps I performed and a guide with some example outputs from my implementation.

Pre-Work Analysis

Cost

According to Google Cloud docs:

When you purchase vCPUs and/or memory on a 1-year commitment, you get the resources at a discount of 37% over the on-demand prices

This is in alignment with the GCP calculator estimates I ran in a cost savings analysis against my environment. Based on this, my company should see substantial enough savings 💰 for these 1-year commitments associated with 40x instances on two different machine types that are running consistently 24/7/365 to make this a worthwhile implementation.
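For a quick back-of-the-envelope check of that 37% figure, the arithmetic can be scripted. The committed_cost function name and the 1000 input are placeholders of mine, not real GCP prices; substitute the on-demand estimate from your own calculator run.

```bash
#!/usr/bin/env bash
# Hypothetical helper: applies a percentage discount to an on-demand cost.
# The default of 37 is the 1-year discount quoted above.
committed_cost() {
  local on_demand="$1" discount_pct="${2:-37}"
  awk -v c="$on_demand" -v d="$discount_pct" \
    'BEGIN { printf "%.2f\n", c * (100 - d) / 100 }'
}

# Placeholder on-demand monthly cost of 1000 (any currency):
committed_cost 1000   # 1000 * (100 - 37) / 100
```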

I recommend performing a similar cost analysis exercise in your environment if you're pursuing purchasing compute commitments. If you're managing multiple GCP projects with a central billing account, then you might be interested in my blog post on performing a GCP BigQuery Expression to capture monthly invoice costs based on labels assigned to resources to assist in your cost analysis.

Google also has a Recommender tool that evaluates the usage of your VMs over 30 days to determine whether or not they're eligible for committed use discounts (CUD) by checking the following:

The VM was active for the entire duration of the 30 days.

The VM's SKU is part of an eligible committed use discount bucket.

The VM's usage was not already covered by an existing commitment.

Machine Type

Set your project-id:

./gcp_committed_resources.sh -p my-project

Determine the machine type used by your compute instances in a specified project by running the script with the -L flag.

Get all machines sorted by machine type:

./gcp_committed_resources.sh -L

Grep for a list of specific machine types:

./gcp_committed_resources.sh -L | grep e2-standard-2
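Keep in mind that grep matches substrings, so a pattern like n2-standard-8 would also match n2-standard-80. Here is a small sketch of an exact-match filter. It assumes each line of the listing starts with the machine type followed by the VM name and zone, as the -L description suggests; the filter_machine_type name and the sample rows are made up for illustration.

```bash
#!/usr/bin/env bash
# Assumes each input line is "<machine-type> <vm-name> <zone>"; adjust the
# column number if your listing is laid out differently.
filter_machine_type() {
  local wanted="$1"
  awk -v t="$wanted" '$1 == t'
}

# Sample rows (made up); only the two exact n2-standard-8 rows are printed.
filter_machine_type n2-standard-8 <<'EOF'
n2-standard-8   web-1   us-west1-a
n2-standard-80  batch-1 us-west1-b
n2-standard-8   web-2   europe-west1-c
EOF
```

With the real script, the same filter could be fed from a pipe, for example ./gcp_committed_resources.sh -L | filter_machine_type e2-standard-2.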

Once you have the machine types listed that you want to purchase committed resources for, cross-check them against the GCP list of commitment types that are eligible for commitments.

If your machine type is eligible for commitments, then review the page associated with your specific compute engine family to capture the number of vCPUs and amount of memory for that machine type. For example, the e2-standard-2 is in the General purpose machines doc with the following specs:

| Machine types | vCPUs* | Memory (GB) | Max number of persistent disks (PDs)† | Max total PD size (TB) | Local SSD | Maximum egress bandwidth (Gbps)‡ |
| --- | --- | --- | --- | --- | --- | --- |
| e2-standard-2 | 2 | 8 | 128 | 257 | No | 4 |

Note: If the majority of your compute instances are running the same machine type in the same region, then this script may not be well suited for your use case. In that scenario, I would probably calculate the total vCPUs and memory needed for a particular commitment type and create a single commitment in that region by running the gcloud compute commitments create command once.
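For that single-commitment scenario, the totals are simple multiplication. The sketch below uses a hypothetical total_resources helper and placeholder values (five e2-standard-2 VMs, project my-project, a made-up commitment name), and it only prints the gcloud command instead of running it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: multiplies per-VM specs by the VM count in one region.
total_resources() {
  local count="$1" vcpus_each="$2" memory_gb_each="$3"
  printf 'vcpu=%d,memory=%dGB\n' \
    $(( count * vcpus_each )) $(( count * memory_gb_each ))
}

resources="$(total_resources 5 2 8)"   # five e2-standard-2 VMs at 2 vCPU / 8 GB each
echo "$resources"                      # prints vcpu=10,memory=40GB

# Printed rather than executed; the commitment name and project are placeholders.
echo gcloud compute commitments create e2-standard-2-us-west4 \
  --project=my-project --region=us-west4 --plan=12-month \
  --type=general-purpose-e2 --resources="$resources"
```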

Commitment & CPU Quota Limits

Before proceeding with creating the new commitments, I highly recommend reviewing both the Commitment and CPU Quota limits on the regions you're working with. I didn't do this step during my pre-work and was affected by a generic gcloud error that broke my while loop while running the script against my second set of machine types which looked like this:

###########################################

Commitment Name: e2-standard-2-asia-south2-22948

ERROR: gcloud crashed (TypeError): expected string or bytes-like object

If you would like to report this issue, please run the following command:

gcloud feedback

To check gcloud for common problems, please run the following command:

gcloud info --run-diagnostics

Searching a few posts online led me to discover it was a commitment quota limit affecting a handful of regions in my deployment. Here are a couple references to Google docs that can help you avoid the gcloud crash that I ran into:

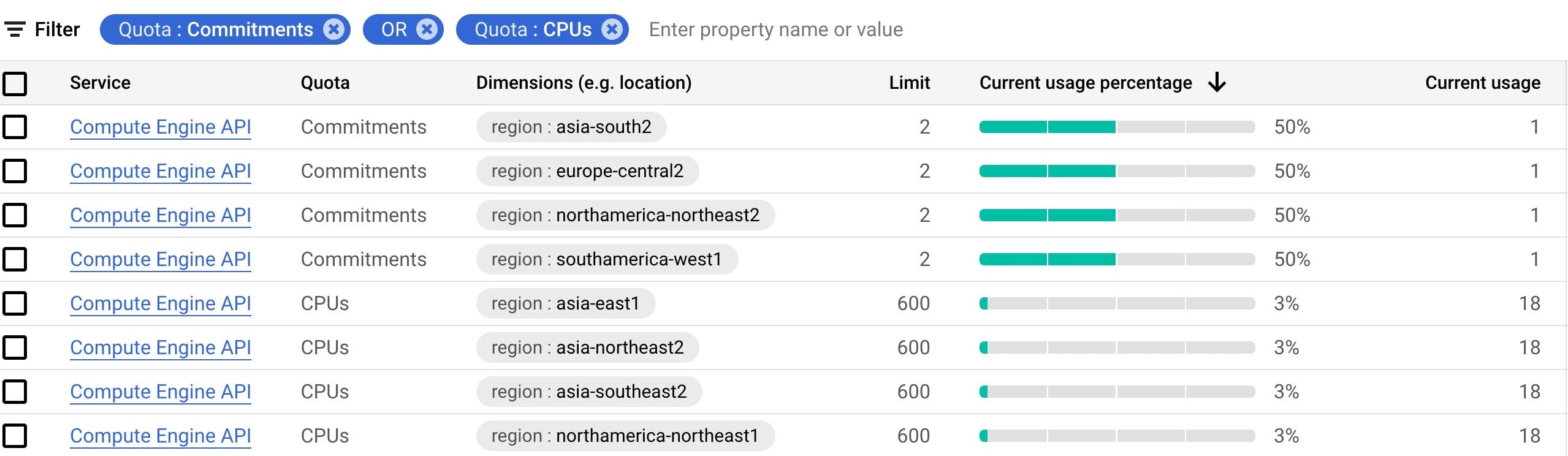

Cross-reference the ./gcp_committed_resources.sh -L output captured in the Machine Type section against the quota limits set in your regions. If needed, submit a request for quota increases that will cover the number of commitments and/or resources (e.g. CPU) you'll be creating for that region. If you have multiple instances of the same machine type in the same region, be sure to request quota increases to reflect that.
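Since the script creates one commitment per instance, the number of commitments needed per region is simply the number of matching instances there. The following sketch counts them from a list of zones; the commitments_needed name and the sample zones are illustrative, and it assumes zone names end in a single-letter suffix such as -a.

```bash
#!/usr/bin/env bash
# Reads zone names (one per line) on stdin and prints "<region> <count>",
# i.e. how many per-instance commitments each region would receive.
commitments_needed() {
  sed 's/-[a-z]$//' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Sample zones (made up):
commitments_needed <<'EOF'
asia-south2-a
asia-south2-b
europe-central2-a
EOF
# prints:
# asia-south2 2
# europe-central2 1
```

Each count can then be compared with the COMMITMENTS quota for that region, which appears in the Quotas page of the console and in the quotas list printed by gcloud compute regions describe <region>.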

Here's an example of what a commitment quota limit increase response from Google looks like after submitting the request using the cloud console:

Your quota request for my-project has been approved and your project quota has been adjusted according to the following requested limits:

+-------------+--------------------------------+-------------------------+-----------------+----------------+

| NAME | DIMENSIONS | REGION | REQUESTED LIMIT | APPROVED LIMIT |

+-------------+--------------------------------+-------------------------+-----------------+----------------+

| COMMITMENTS | region=asia-south2 | asia-south2 | 2 | 2 |

| | | | | |

| COMMITMENTS | region=europe-central2 | europe-central2 | 2 | 2 |

| | | | | |

| COMMITMENTS | region=northamerica-northeast2 | northamerica-northeast2 | 2 | 2 |

| | | | | |

| COMMITMENTS | region=southamerica-west1 | southamerica-west1 | 2 | 2 |

+-------------+--------------------------------+-------------------------+-----------------+----------------+

After approved, Quotas can take up to 15 min to be fully visible in the Cloud Console and available to you.

It took about 20 minutes for the change to appear for me in the Quota service of the cloud console.

Here's a screenshot of what it looked like after the quota limits were increased and I had successfully created commitments for those affected regions:

Implementation

Purchasing commitments without attached reservations is the Google doc I followed for my scenario which includes the required permissions for applying these changes.

Be sure to run all the "Lists" options defined in the Usage as part of your pre-work analysis before the implementation. These should be used to help build out your dry run results followed by the actual implementation with the -G flag set.

Perform a dry run with the following options set:

[-p project-id] [-m machine-type] [-t commitment-type] [-v vcpus] [-M memory]

Example dry run:

❯ ./gcp_committed_resources.sh -p my-project -m e2-standard-2 -t general-purpose-e2 -v 2 -M 8

Updated property [core/project].

Project set to: my-project

###########################################

Commitment Name: e2-standard-2-asia-east1-6065

Region: asia-east1

Project: my-project

Resources: vcpu=2,memory=8

Plan: 12-month

Type: general-purpose-e2

###########################################
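The dry run versus creation split can be sketched as a single guarded function. This is my own minimal approximation, not the script's create_commitments function: the create_commitment name and its argument order are assumptions, and the printed fields simply mirror the example dry run output.

```bash
#!/usr/bin/env bash
dry_run=1   # flip to 0 only after reviewing the printed plan

# Hypothetical per-commitment step: print the plan, or create it when dry_run=0.
create_commitment() {
  local name="$1" region="$2" project="$3" resources="$4" type="$5"
  echo "###########################################"
  echo "Commitment Name: $name"
  if (( dry_run )); then
    echo "Region: $region"
    echo "Project: $project"
    echo "Resources: $resources"
    echo "Plan: 12-month"
    echo "Type: $type"
  else
    gcloud compute commitments create "$name" --project="$project" \
      --region="$region" --plan=12-month --type="$type" \
      --resources="$resources"
  fi
}

create_commitment e2-standard-2-asia-east1-6065 asia-east1 my-project \
  vcpu=2,memory=8 general-purpose-e2
```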

Perform the implementation of the create commitments function by appending the -G flag to your dry run options:

[-p project-id] [-m machine-type] [-t commitment-type] [-v vcpus] [-M memory] [-G create-commitments]

Example successful commitment creation run:

❯ ./gcp_committed_resources.sh -p my-project -m e2-standard-2 -t general-purpose-e2 -v 2 -M 8 -G

Updated property [core/project].

Project set to: my-project

###########################################

Commitment Name: e2-standard-2-asia-east1-28472

Created [https://www.googleapis.com/compute/v1/projects/my-project/regions/asia-east1/commitments/e2-standard-2-asia-east1-28472].

---

autoRenew: false

category: MACHINE

creationTimestamp: '2023-03-31T07:56:32.947-07:00'

endTimestamp: '2024-04-01T00:00:00.000-07:00'

id: 'my-id'

kind: compute#commitment

name: e2-standard-2-asia-east1-28472

plan: TWELVE_MONTH

region: https://www.googleapis.com/compute/v1/projects/my-project/regions/asia-east1

resources:

- amount: '2'

  type: VCPU

- amount: '8192'

  type: MEMORY

selfLink: https://www.googleapis.com/compute/v1/projects/my-project/regions/asia-east1/commitments/e2-standard-2-asia-east1-28472

startTimestamp: '2023-04-01T00:00:00.000-07:00'

status: NOT_YET_ACTIVE

statusMessage: The commitment is not yet active (its startTimestamp is in the future). It will not apply to current resource usage.

type: GENERAL_PURPOSE_E2

###########################################

As soon as the committed resources have been purchased they'll display as "Pending" in the cloud portal and "NOT_YET_ACTIVE" from the gcloud cli.

Example output of current commitments list using the -C flag immediately post-change:

❯ ./gcp_committed_resources.sh -C

NAME REGION END_TIMESTAMP STATUS

e2-standard-2-us-central1-7087 us-central1 2024-04-01T00:00:00.000-07:00 NOT_YET_ACTIVE

e2-standard-2-europe-west1-13832 europe-west1 2024-04-01T00:00:00.000-07:00 NOT_YET_ACTIVE

e2-standard-2-us-west1-21069 us-west1 2024-04-01T00:00:00.000-07:00 NOT_YET_ACTIVE

e2-highcpu-8-asia-east1-14956 asia-east1 2024-04-01T00:00:00.000-07:00 NOT_YET_ACTIVE

e2-highcpu-8-asia-east1-20966 asia-east1 2024-04-01T00:00:00.000-07:00 NOT_YET_ACTIVE

e2-standard-2-asia-east1-28472 asia-east1 2024-04-01T00:00:00.000-07:00 NOT_YET_ACTIVE

Commitments will move into an Active state at midnight the following day per Google doc:

After purchasing a commitment, the commitment is effective starting at midnight the following day. For example, a commitment purchased on Monday afternoon at 3 PM US and Canadian Pacific Time (UTC-8, or UTC-7 during daylight saving time) becomes effective on Tuesday at 12 AM US and Canadian Pacific Time (UTC-8 or UTC-7). The discounts are automatically applied to applicable instances in the region you specified, and to the projects in which those discounts are purchased.

Output using the -C flag 24+ hours post-change:

❯ ./gcp_committed_resources.sh -C

NAME REGION END_TIMESTAMP STATUS

e2-standard-2-us-central1-7087 us-central1 2024-04-01T00:00:00.000-07:00 ACTIVE

e2-standard-2-europe-west1-13832 europe-west1 2024-04-01T00:00:00.000-07:00 ACTIVE

e2-standard-2-us-west1-21069 us-west1 2024-04-01T00:00:00.000-07:00 ACTIVE

e2-highcpu-8-asia-east1-14956 asia-east1 2024-04-01T00:00:00.000-07:00 ACTIVE

e2-highcpu-8-asia-east1-20966 asia-east1 2024-04-01T00:00:00.000-07:00 ACTIVE

e2-standard-2-asia-east1-28472 asia-east1 2024-04-01T00:00:00.000-07:00 ACTIVE

If you happen to run into a gcloud error such as the one referenced in the Commitment & CPU Quota Limits section above, you won't be able to re-run the script, as you'd end up creating duplicate commitments for resources you've already purchased commitments for, resulting in unintended additional cloud spend 💸. I've created another script for these scenarios with _manual appended to the name here: gcp_committed_resources_manual.sh

The script performs a one-time loop through an array of defined regions that missed getting commitments created.

The region array/list, PROJECT_ID, vcpu, memory, and commitment type all need to be hard coded for your use case.

If you have duplicate machine types hosted in any of the regions listed in the array, you'll need to account for that. You can rerun the script to create commitments in those scenarios since commitment names are appended with the value of Bash's built-in $RANDOM variable.

Perform a dry run with:

./gcp_committed_resources_manual.sh

Perform the implementation with:

./gcp_committed_resources_manual.sh --go
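To give a feel for the shape of such a manual run, here is a rough sketch of a hard-coded region loop with a --go switch. It is not the contents of gcp_committed_resources_manual.sh: the run_manual function, the variable names, and every value shown are placeholders of mine.

```bash
#!/usr/bin/env bash
# All values below are placeholders to hard-code for your own case.
PROJECT_ID="my-project"
MACHINE_TYPE="e2-standard-2"
COMMITMENT_TYPE="general-purpose-e2"
RESOURCES="vcpu=2,memory=8GB"
REGIONS=(asia-south2 europe-central2)   # regions that missed their commitments

run_manual() {
  local go=0 region name
  local -a cmd
  if [[ "${1:-}" == "--go" ]]; then go=1; fi
  for region in "${REGIONS[@]}"; do
    name="${MACHINE_TYPE}-${region}-${RANDOM}"
    cmd=(gcloud compute commitments create "$name"
         --project="$PROJECT_ID" --region="$region" --plan=12-month
         --type="$COMMITMENT_TYPE" --resources="$RESOURCES")
    if (( go )); then
      "${cmd[@]}"                  # real purchase: cannot be undone
    else
      echo "DRY RUN: ${cmd[*]}"    # only show what would be run
    fi
  done
}

run_manual   # dry run; pass --go to actually create the commitments
```

Listing a region twice in the array is one way to account for two instances of the same machine type in that region, since each pass gets its own $RANDOM suffix.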

❗️❗️Warning ❗️❗️ Extra caution should be taken using these scripts as you cannot back out of purchasing committed resources. When creating GCP commitments you are agreeing to pay for the resources assigned to your commitments under the length of time defined in the plan, in this case, 12 months.

Conclusion

I've successfully run these scripts from one of the GCP projects in my environment, purchasing commitments for 40x globally distributed compute instances running two different machine types. This should result in a discount of 37% over on-demand pricing resulting in worthwhile savings on resources running in the project.

While running the script to create commitments for the 2nd set of machine types I encountered the gcloud error as mentioned in the Commitment & CPU Quota Limits section above. I was able to successfully create commitments for these remaining regions using the secondary Manual script referenced in Step 5. of the Implementation section. To avoid this in the future, I'll be taking a closer look at Quota limits for my regions so I can run the primary script once through.

Before a commitment plan expires, you could set your commitments to renew with a simple bash script that loops through all the existing commitments, capturing each Commitment Name and its associated region using the gcloud compute commitments list command or the -C flag in the script. Those values can then be applied to the gcloud compute commitments update $COMMITMENT_NAME --auto-renew --region=$region command referenced here.
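A renewal loop along those lines could be sketched as follows. The renew_commitments function and its apply argument are hypothetical, and the sample input reuses two commitment names from the listing above; by default it only prints the commands.

```bash
#!/usr/bin/env bash
# Reads "<commitment-name> <region>" pairs on stdin. With apply=1 it runs the
# update; otherwise it only prints the command it would run.
renew_commitments() {
  local apply="${1:-0}" name region
  while read -r name region; do
    [[ -z "$name" ]] && continue
    if (( apply )); then
      # </dev/null keeps gcloud from consuming the loop's stdin
      gcloud compute commitments update "$name" --auto-renew \
        --region="$region" </dev/null
    else
      echo "gcloud compute commitments update $name --auto-renew --region=$region"
    fi
  done
}

# Sample input; in practice the pairs could come from something like
#   gcloud compute commitments list --format="value(name,region.basename())"
# (that format expression is an assumption, so check its output first).
renew_commitments <<'EOF'
e2-standard-2-us-central1-7087 us-central1
e2-highcpu-8-asia-east1-14956 asia-east1
EOF
```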